Immaterial rights related to source code

Computer software can generally be protected legally through three distinct mechanisms: copyright, patents and trade secrets. In our case, trade secrets do not apply, as we’re talking about public code here. Software patents can be relevant if something you're doing infringes a patent, but as software patents focus on “solutions” rather than specific source code, that risk is not directly related to the use of Copilot, and Copilot should not add an extra dimension to watch out for. Our focus here is on copyright.

Copyright grants a group of rights that are both economic and moral in nature: economic rights, such as the right to make copies, the right of public performance and the right of distribution, and moral rights, such as the right of attribution, the right to decide whether a work is published and the right of integrity. In principle, anyone who creates a written or other creative work is entitled to copyright in that work.

For computer programs, this is further clarified in the Computer Programs Directive 2009/24/EC, Article 1 of which states that “A computer program shall be protected if it is original in the sense that it is the author's own intellectual creation. No other criteria shall be applied to determine its eligibility for protection.” It also states that “Protection in accordance with this Directive shall apply to the expression in any form of a computer program.” Case law, for example case C-406/10 SAS Institute, confirms that source code is such an expression of a computer program. In summary, if source code is the author’s own intellectual creation, it is protected. The scope of the protection includes, among other things, the exclusive right to do or authorise “the permanent or temporary reproduction of a computer program by any means and in any form, in part or in whole” (Article 4). This does sound strict, but there are some exceptions and limitations. Additionally, the usual way for rightholders to grant users rights based on copyright is licensing; that’s what all those licence texts are for.

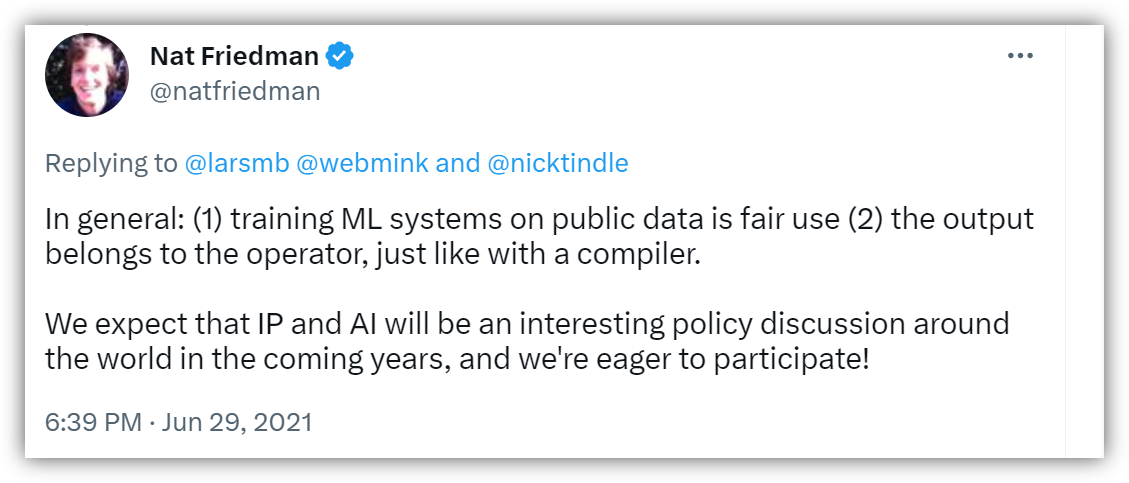

When the immaterial rights to the source code used by GitHub Copilot were discussed, GitHub’s then-CEO Nat Friedman responded on Twitter with the following:

So the argument is twofold: training the model is fair use, and the output belongs to the operator of the tool. Let’s take a look at these arguments.

“Fair use” argument

“Fair use” is a doctrine in US law that permits limited use of copyrighted material without prior approval from the copyright holder if the use is deemed acceptable. As the model has presumably been trained in the US, the argument is relevant, since legality will be judged based on where the act was performed. There is some merit to this claim: in Authors Guild v. Google, for example, where Google scanned copyrighted books to create search and analytics tools and showed snippets of text to end users, the court did find this to be fair use. Whether the argument also holds here is beyond the scope of this article, but the point to note is that the discussion is relevant only to training the model, not to generating or using the code. For an end user in the EU, generating the code could not be considered fair use, if only because “fair use” is neither part of nor compatible with EU copyright legislation: we don’t have the same doctrine.

“Output belongs to the operator” argument

This argument can be understood in two ways: either the operator of the tool has created a derivative work using the tool and as such owns it as a separate creation, or the AI has “created” it.

In EU intellectual property legislation, the “author” of a work refers to a natural person, a group of natural persons or a legal person; authorship cannot be obtained by anyone else. This means that if a work were considered to have been created by a computer program, under the current system it would have no author and thus no immaterial rights attached to it, meaning Copilot could generate it and offer it to its users without issues. A derivative work, by contrast, generally requires permission from the copyright holders of the original work, which would be problematic for Copilot.

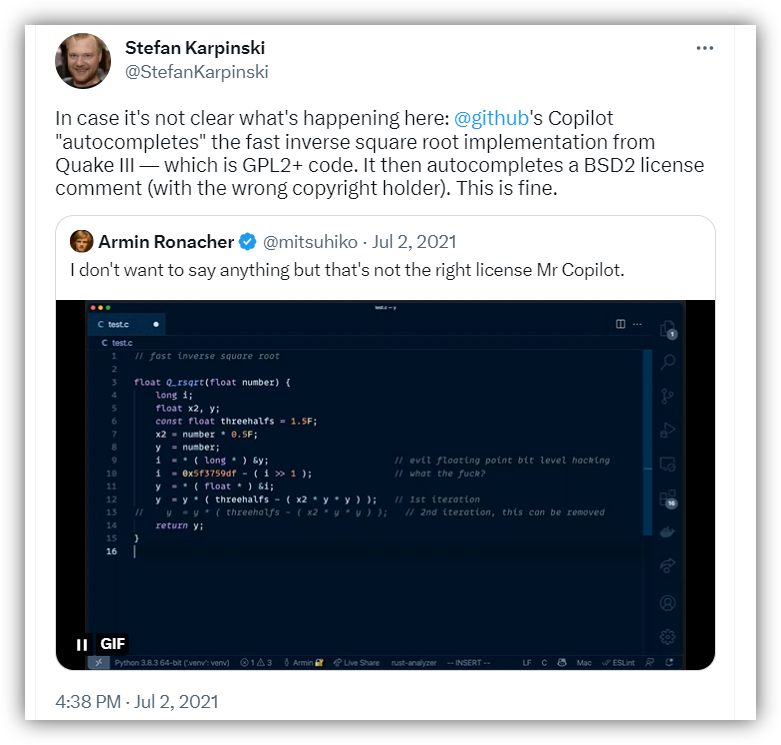

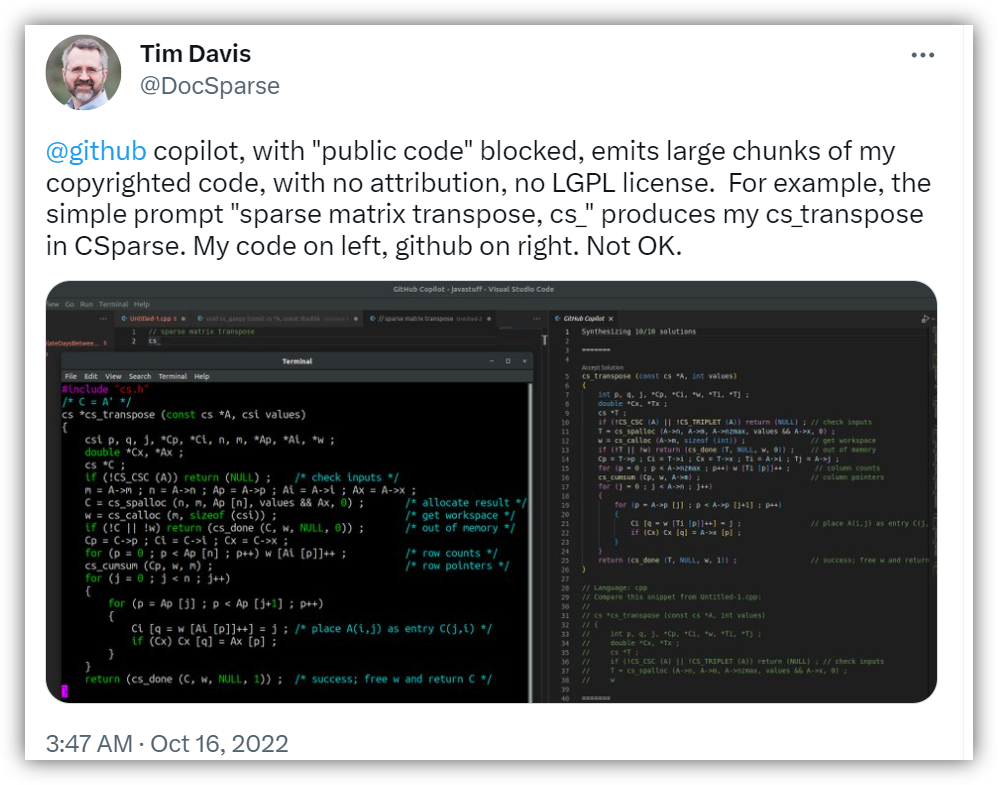

The issue here is that, in practice, the output of GitHub Copilot can be very close to some original work, if not identical to it. This behavior has been raised on Twitter with example cases in 2021 and 2022:

It would appear that Copilot in some cases generates someone else’s work verbatim, and we cannot see that either Copilot or the operator has contributed anything of their own. As such, it is hard to claim that this was “created by AI” and free of copyright issues. It seems more plausible to consider this a derivative work, or even a reproduction of the original work, requiring the relevant permissions as well as attribution. The threshold for requiring permission from the original copyright holders is quite low, as seen in the European Court of Justice landmark case C-5/08 Infopaq. In that case, newspaper articles were scanned for predetermined keywords, and for each match an extract of 11 words, consisting of the keyword together with the five words preceding and following it, was stored and printed out. The court held that storing and printing such an extract can constitute reproduction in part, requiring permission from the copyright holders, if the elements reproduced express the author’s own intellectual creation.
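To give a sense of how little text was at stake in Infopaq, the keyword-plus-five-words extraction can be sketched in a few lines of Python. This is a hypothetical illustration, not the system used in the case: the function name `extract_snippets`, the whitespace-based word splitting and the sample article are all assumptions made for the example.

```python
def extract_snippets(text: str, keywords: set[str], context: int = 5) -> list[str]:
    """Return, for each keyword hit, the keyword plus up to `context` words on each side.

    Hypothetical sketch: words are split on whitespace and compared
    case-insensitively, ignoring surrounding punctuation.
    """
    words = text.split()
    wanted = {k.lower() for k in keywords}
    snippets = []
    for i, word in enumerate(words):
        if word.strip(".,;:!?\"'()").lower() in wanted:
            start = max(0, i - context)               # up to five words before
            end = min(len(words), i + context + 1)    # keyword plus up to five after
            snippets.append(" ".join(words[start:end]))
    return snippets


# Made-up sample text, standing in for a scanned newspaper article.
article = ("The minister said on Tuesday that the new copyright rules "
           "would take effect next year after a long debate in parliament.")

for snippet in extract_snippets(article, {"copyright"}):
    print(snippet, f"({len(snippet.split())} words)")
# prints: on Tuesday that the new copyright rules would take effect next (11 words)
```

An extract of this size is a small fraction of a typical source file, which is why even a short verbatim suggestion can matter legally.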

In practice, for Copilot this could be a matter of implementation details that future improvements could address. Legality could then hinge on whether the tool can ensure that its suggestions are original enough not to violate the rights of copyright holders. It is not easy to say what the ultimate criteria for deciding this should be, but some analysis of originality would seem to be in order.

Albert Ziegler, Principal Machine Learning Engineer at GitHub, wrote about this issue on the GitHub blog in 2021, announcing plans to include prefilters in the tool to report any cases of quoting a source verbatim. In June 2022, GitHub introduced a setting, which users can enable, to block suggestions matching public code. However, as Tim Davis’ example from October 2022 shows, there still seem to be issues with it.
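How GitHub’s filter works internally is not described here. Purely to illustrate the general idea, a verbatim-match check could compare every window of consecutive tokens in a suggestion against an index built from public code. Everything in this sketch is an assumption: the tokenization, the window size `N` and the function names are hypothetical, and this is not GitHub’s implementation.

```python
import re


def tokenize(code: str) -> list[str]:
    # Hypothetical normalization: identifiers, numbers and single symbols,
    # so that whitespace and line breaks do not affect matching.
    return re.findall(r"[A-Za-z_]\w*|\d+|\S", code)


def ngrams(tokens: list[str], n: int) -> set[tuple[str, ...]]:
    # All windows of n consecutive tokens (empty if there are fewer than n tokens).
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def build_index(public_sources: list[str], n: int) -> set[tuple[str, ...]]:
    # Collect every n-token window from the "public code" corpus.
    index: set[tuple[str, ...]] = set()
    for source in public_sources:
        index |= ngrams(tokenize(source), n)
    return index


def matches_public_code(suggestion: str, index: set[tuple[str, ...]], n: int) -> bool:
    # Flag the suggestion if any n-token window appears verbatim in the index.
    return not ngrams(tokenize(suggestion), n).isdisjoint(index)


# Example with a tiny "public code" corpus and an arbitrary window of 8 tokens.
N = 8
public_sources = ["def add(a, b):\n    return a + b\n"]
index = build_index(public_sources, N)

copied = "def add(a, b): return a + b"   # same tokens, different layout
renamed = "def add(x, y): return x + y"  # variables renamed

print(matches_public_code(copied, index, N))   # True
print(matches_public_code(renamed, index, N))  # False
```

A naive exact-match approach like this only catches verbatim copies: renaming a couple of variables is enough to slip past it, even though the result may still be a reproduction in the legal sense.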

GitHub Terms argument

One argument put forward is that GitHub’s terms for submitting code are worded so as to allow tools such as GitHub Copilot to work with that code. The current terms do grant permissions that could cover training the tool’s machine learning (ML) model and even using the code as a basis for the tool. However, the terms are a contractual agreement between the code submitter and GitHub, and as they do not confer rights on third parties such as users of GitHub Copilot, they do not seem to cover generating the code, nor do they allow users of Copilot to claim that code as their own.

The terms could possibly be changed so that they would grant permissions to users of GitHub Copilot. However, in the EU, any clause in terms and conditions that purports to waive or transfer moral rights is void, even though the law allows the author to consent to certain actions or omissions that would otherwise infringe their moral rights. Such consent is likely not enough in this case, as the rights to code you write for your employer generally need to be transferable to the employer.

We must also note that not all code used by Copilot comes from GitHub.

The DSM directive

As mentioned before, European Union law does not recognize the fair use doctrine but instead builds on explicit exceptions to copyright. In April 2019, the DSM Directive (2019/790) was adopted, providing two copyright exceptions related to data mining: one for scientific research and the other for general-purpose use. They provide the legal basis for data mining of copyrighted works in the EU.

Article 4 of the directive, the general-purpose exception, provides that member states must “provide for an exception or limitation to” immaterial rights “for reproductions and extractions of lawfully accessible works and other subject matter for the purposes of text and data mining.” It adds that “Reproductions and extractions […] may be retained for as long as is necessary for the purposes of text and data mining”, and that “the exception or limitation […] shall apply on condition that the use of works and other subject matter […] has not been expressly reserved by their rightholders in an appropriate manner, such as machine-readable means in the case of content made publicly available online.” In other words, the directive allows reproduction only for the purpose and duration of data mining, and it requires that reservations made by rightholders be respected. We have already discussed the possible issues of derivative works and reproduction. Additionally, GitHub has not communicated any way for rightholders to opt out of having their code used as training material for Copilot, which appears difficult to reconcile with the directive, assuming any of the data mining takes place within the EU legal framework.

Conclusions and recommendations

GitHub Copilot is a promising tool. However, some of its behavior is clearly problematic, and even if the most problematic behavior were changed, the legal status of generated code would essentially remain undecided.

As such, my recommendation for programmers is to exercise caution when submitting code that Copilot generates. The ultimate responsibility for fulfilling any licensing and attribution requirements of submitted code lies with the end user. Therefore, if you do submit such code, you are at this time strongly advised to verify these obligations personally to the best of your ability.

Vincit’s principles for using generative AI can be found here.